Key takeaways:

- Autonomous business is an operating model shift, not a tooling upgrade: move from input-first work (humans sequencing tasks) to outcome-first execution (agents orchestrating workflows to meet business goals).

- Agentic AI drives three irreversible changes in the enterprise operating model: workflows become outcome-driven, the workforce becomes human + digital workers, and decisions move toward closed-loop automation—similar to how Wi-Fi permanently removed “location” as a constraint on work.

- Most AI programs stall at the “data hourglass” bottleneck: data volume and demand explode, but static pipelines can’t deliver context fast enough for agents to act safely in real time.

- The winning foundation is a multimodal data fabric + active metadata augmentation: connect data across structured and unstructured sources, then add semantics/lineage/policy context so agents can reason—not just generate.

- Real-time outcomes require correlating weak signals across systems (e.g., fraud patterns within seconds). Speed is the advantage—but only if governance and semantic context are built in.

- Governance is non-negotiable: agent oversight, auditability, access controls, and guardrails keep autonomy aligned to enterprise objectives.

The shift CIOs can’t ignore: from input-first to outcome-first

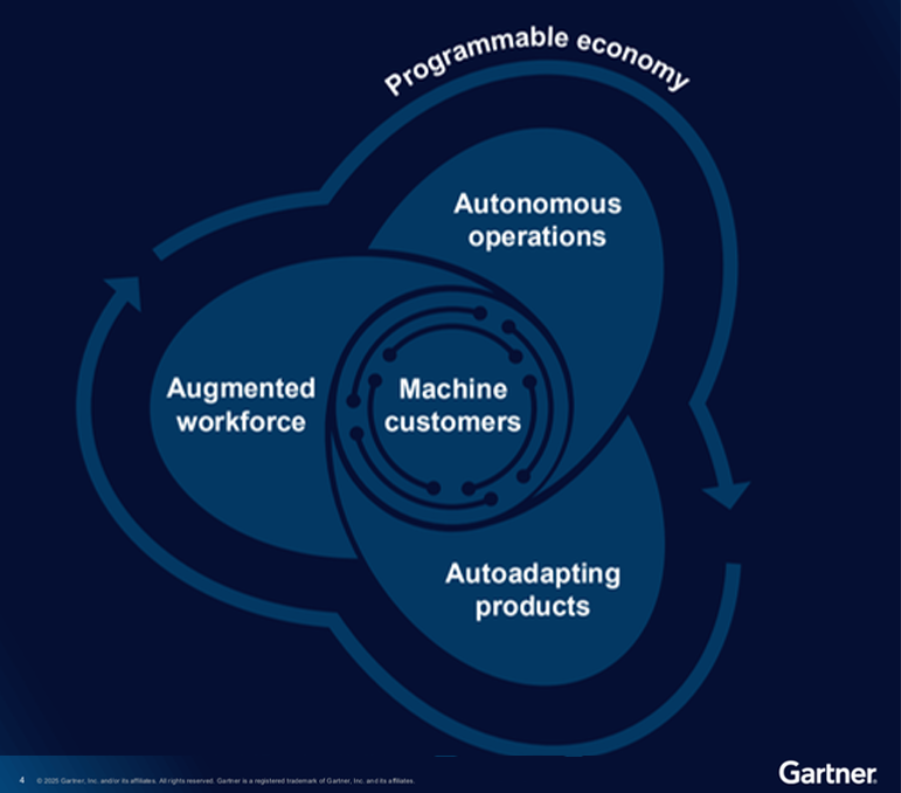

At Gartner IT Symposium Barcelona 2025, one signal was hard to miss: AI will reshape the enterprise operating model over the next decade—not as a feature, but as a structural change in how work gets done.

The destination is what Gartner frames as autonomous business—a strategy that uses self-improving, adaptable technology to make decisions, take action, and create new types of value. By 2035, the market leaders in at least one industry will operate this way—and everyone else will be competing at a permanent disadvantage.

To reach that destination, enterprises must flip the model:

- Input-first (legacy): humans manually sequence tasks (“extract X → analyze Y → email Z”).

- Outcome-first (autonomous): humans define the goal; agentic AI orchestrates the workflow dynamically to achieve it.

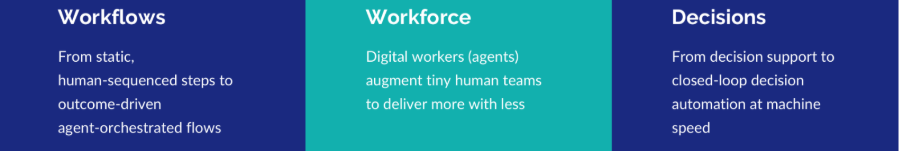

The three irreversible impacts of agentic AI on work

Gartner frames three “irreversible impacts” that together redefine how the enterprise operates:

- Workflows: shifting from static, human-sequenced steps to outcome-driven, agent-orchestrated flows.

- Workforce: digital workers (agents) augment small human teams to deliver more with less.

- Decisions: moving from decision support to closed-loop decision automation at machine speed.

Real-world analogy: what “irreversible” looks like

Cyril described “irreversible” change with a simple memory from 1998: a systems engineer opened a laptop in a corridor—no cable, no desk—and accessed email and network resources wirelessly. In that moment, the implication was obvious: work would never again be constrained to a fixed physical connection. Today we don’t even think about Wi-Fi—but that shift permanently altered how work happens. That’s the kind of irreversibility Gartner is pointing to with agentic AI’s impact on workflows, workforce, and decisions.

The enabling path: The architect and the synthesist

If the “North Star” is autonomous, outcome-first enterprise operations, the next question is: what enables it?

In the webinar, we anchor the enabling path in two strategic roles:

1) The Architect: build the technical engine

You don’t reach autonomy with legacy coding practices—too slow, too rigid. The path forward is AI-native development platforms that let humans and AI agents co-build systems from intent (natural language), enabling “tiny teams” to deliver disproportionate output.

Live webinar insight: Cyril emphasized that focusing only on platforms “used to build agents” can fall short. What scales is a unified platform that synthesizes data engineering, software engineering, and AI engineering through orchestration—so business teams can meaningfully participate (because domain context is the real constraint, not just tooling).

2) The Synthesist: orchestrate specialized intelligence

Autonomous operations don’t come from a single chatbot. They require:

- Multiagent systems (MAS): a “pit crew” of planning, execution, and critique agents working together.

- Domain-specific language models (DSLMs): enterprise-context models that reduce hallucinations and improve accuracy in regulated or high-stakes domains.

Together, these shifts explain why agentic AI is not “one product purchase.” It’s an architectural transition: platforms, orchestration, model strategy, and governance—built to scale.

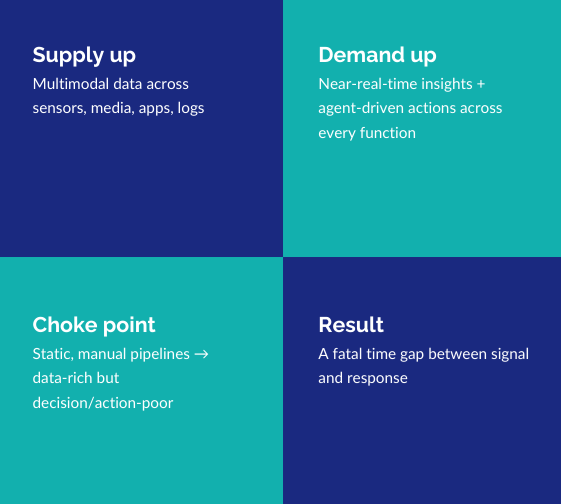

The bottleneck: why most enterprises are stalled (the data hourglass)

If the vision is clear and the tools exist, why are most organizations still stuck?

Because the enterprise is living inside a data hourglass:

- At the top: exploding multimodal data (SaaS, documents, streams, logs, sensors, media).

- At the bottom: exploding demand for near-real-time insights and actions—from every team, system, and agent.

- In the middle: the choke point—static, manual pipelines.

The result is a pattern I see repeatedly: organizations are data rich but decision- and action-latency constrained. AI agents may look impressive in a demo, but without contextualized, governed, timely data, they are functionally blind.

Example: fraud detection — input-first vs outcome-first

Cyril used a financial services scenario to show the shift:

- Input-first today: a product owner requests components like a rules engine, a fraud detection model, analyst queues, and post-incident reporting.

- Outcome-first tomorrow: a business leader asks an agent: “Cut fraud losses by 25% while minimizing customer friction.”

In the outcome-first model, agentic systems continuously optimize the trade-off between fraud loss and customer experience through orchestration—rather than maximizing a single metric in isolation.

Example: weak signals + one-second orchestration (why latency kills)

Cyril also described what “latency-unconstrained” decisioning looks like in practice. Imagine these events within a one-second window:

- A small $1.50 test charge on a rarely used card

- A mobile login from a new device

- A password reset attempt

- An IP/geolocation anomaly

- A wire transfer attempt

Individually, none of these crosses typical human thresholds. Collectively, an agent can correlate them into a high-confidence fraud pattern and take actions within that same second:

- Silently block the wire transfer

- Allow the $1.50 test charge (to avoid tipping off the attacker)

- Trigger step-up authentication

- Freeze the card token

- Open a fraud case with pre-populated evidence for human review

Humans cannot match this speed—even with real-time dashboards. The limiting factor becomes: can agents get the right data and context fast enough to act safely?

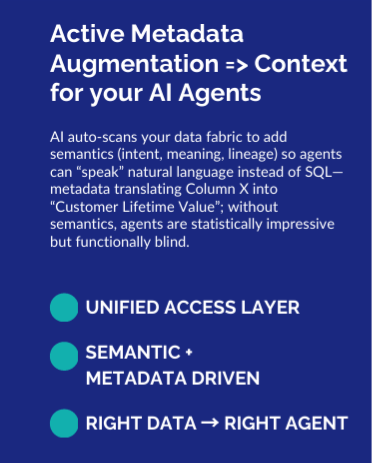

The foundation: Multimodal Data Fabric + Active Metadata Augmentation

The solution is not “a bigger data warehouse.” Forcing everything into one central lake does not scale with data variety, formats, and sources. The solution is a multimodal data fabric: a unified logical layer that weaves a net and connects data across sources—on-prem, cloud, SaaS, documents, and streams—to a common Semantic Layer with ontology and knowledge graphs.

But connectivity alone isn’t enough. The differentiator is what the webinar calls the “secret sauce”:

Active Metadata Augmentation = context for AI agents

AI agents don’t “speak SQL.” They operate in natural language and intent. To reason correctly, they need semantics: what data means, how it relates, where it came from, and what policies apply.

Active metadata augmentation uses AI to scan the fabric and add semantic knowledge—translating “Column X” into “Customer Lifetime Value,” attaching lineage, and providing business context. Without that semantic layer, agents are statistically impressive—but operationally blind.

Example: what “multimodal” really means (fraud + contact center + threat intel)

Continuing the fraud scenario, Cyril explained multimodality in a concrete way. You don’t just combine structured telemetry like:

- transaction velocity spikes

- new-device login risk scores

- event streams across channels

You also combine unstructured signals like:

- a contact center chat from 20 minutes ago: “I think my card has been compromised”

- a fraud bulletin describing new “test charge” patterns

This is the point: agents need situational context that lives across structured and unstructured sources—not in one neat table.

Example: the metadata you actually need (identity + relationship graphs)

Cyril broke down one practical piece: identity/entity resolution metadata. To detect weak signals, you need entity keys (customer ID, account ID, merchant ID, etc.), plus relationship graphs:

- customers ↔ devices

- accounts ↔ devices

- merchants ↔ accounts

Then you calculate link confidence scores (e.g., “this device belongs to this customer with X confidence”) using recency and stability metadata events like first seen/last seen, frequency, new-device flags, etc. Finally, you keep it active by continuously updating those relationships and link confidence scores as events stream in.

Where does this get stored?

Active metadata is typically stored centrally—often in a graph database (e.g., Neo4j)—complemented by vector embeddings in a vector store, with an ontology/knowledge-graph layer that can evolve over time.

Closing the loop: decision intelligence platform → decision automation

When you combine:

- Multimodal data fabric (foundation)

- Active metadata augmentation (context)

- Agentic networks (execution + orchestration)

you move toward a decision intelligence platform pattern and ultimately decision automation—the closed loop where data becomes context, context becomes decision, and decision becomes action. That is the practical path to autonomous business.

Example: composite AI in retail (churn prevention)

Cyril gave an example of “composite AI”: an agent preventing churn by combining multiple techniques:

- a supervised churn prediction model

- an unsupervised recommendation model

- autonomous generation of a personalized email offer (discounts/items)

The point is that decision intelligence platforms will blend techniques—not treat “AI” as one monolithic capability.

“Data becomes context. Context becomes decision. Decision becomes action. This is how you move from decision support to decision automation—and make the autonomous business model real.”

Governance by design: autonomy without losing control

As autonomy increases, so does risk. Organizations need explicit AI agent governance—objectives, accountability, oversight, and controls—so agents pursue the right outcomes in the right way.

In the webinar, Cyril positioned this as the “Vanguard” dimension: the trust infrastructure that makes autonomy deployable in real enterprises (explainability, accountability, controls)—even though it wasn’t the core focus of this particular session.

Where Calibo fits

From a delivery standpoint, there are two practical paths to getting moving—both anchored in the same foundation of governance + orchestration + self-service:

- Platform: a governed self-service orchestration platform with unified data, software, and AI engineering capabilities you can spin up quickly in your own cloud or as a managed service.

- Services: Digital Innovation as a Service—idea to production in weeks, built on the same foundation.

What to do next (a pragmatic 12-month sequence)

A simple execution path from the webinar:

- Now (0–3 months): pilot an AI-native dev platform; stand up a data fabric POC in one high-value domain; add metadata scanning.

- Next (3–6 months): launch your first MAS for a complex workflow; evaluate DSLM options; define AI guardrails.

- Later (6–12 months): converge on a decision intelligence platform pattern; scale fabric + agents with governance by design.

Q&A add-on: the single biggest structural reason enterprises stall

When asked why organizations struggle even with budget and tools, Cyril’s answer was blunt: teams often don’t prioritize data platform foundations early, and when they do, they try to boil the ocean instead of starting with one use case in one domain. He also stressed that without a semantic layer grounded in ontology and knowledge graphs that spans structured + unstructured data, enterprises won’t reach dependable outcomes.

Q&A add-on: the “first 30 days” move if you’re at zero

If you have no fabric, no metadata layer, no agentic pilots—Cyril’s first step was:

- pick a small but impactful use case

- identify the data sources that feed it

- start with a platform that can support data engineering → prep → experimentation → agentic building in a controlled sandbox

This becomes the foundation everything else builds on.

Get the full end-to-end narrative—from Gartner signals and the shift to outcome-first execution, to the data foundations (multimodal fabric + active metadata) required for safe, real-time autonomy.

Watch the on-demand webinar: The Road to Autonomous Business

FAQs

What’s the difference between agentic AI and traditional automation?

Traditional automation follows predefined rules and flows. Agentic AI is outcome-driven: it can plan, decide, and act (within bounds) to achieve goals, shifting workflows from static sequences to context-aware orchestration.

Why do AI agents fail in real enterprise environments?

Most failures aren’t model issues—they’re data issues. Enterprises are overwhelmed by multimodal data and constrained by static pipelines, so agents lack the context (semantics, lineage, meaning) needed to reason and act safely. Active metadata augmentation + a semantic layer (ideally grounded in ontology/knowledge graphs) is the missing layer.

How do we avoid building a fabric that only works for structured data?

In the Q&A, Cyril pointed out a common early trap: teams build semantic layers via JSON/YAML approaches that map well to structured warehouses, then stall when they try to support unstructured/RAG-driven use cases. The recovery path is an ontology-based semantic layer supported by knowledge graphs, with AI-assisted semantic model generation plus human refinement.

Trending articles

Data orchestration: why modern enterprises need a data orchestration platform

Data is pouring in from myriad sources—cloud applications, IoT sensors, customer interactions, legacy databases—yet without proper coordination, much of it remains untapped potential. This is where data orchestration comes in.

How Enterprise Architects can get more support for technology led innovation

Enterprise Architects are increasingly vital as guides for technology-led innovation, but they often struggle with obstacles like siloed teams, misaligned priorities, outdated governance, and unclear strategic value. The blog outlines six core challenges—stakeholder engagement, tool selection, IT-business integration, security compliance, operational balance, and sustaining innovation—and offers a proactive roadmap: embrace a “fail fast, learn fast” mindset; align product roadmaps with enterprise architecture; build shared, modular platforms; and adopt agile governance supported by orchestration tooling.

Why combine an Internal Developer Portal and a Data Fabric Studio?

Discover how to combine Internal Developer Portal and Data Fabric for enhanced efficiency in software development and data engineering.

The differences between data mesh vs data fabric

Explore the differences of data mesh data fabric and discover how these concepts shape the evolving tech landscape.

More from Calibo

One platform, whether you’re in data or digital.

Find out more about our end-to-end enterprise solution.